Implementing Retry and Circuit Breaker pattern together with Spring Boot

There are several microservice patterns that help us manage failures in the services we depend on. Among these, the Circuit Breaker and Retry patterns are very popular and widely used in a microservice ecosystem.

What is a Circuit Breaker?

The concept of the circuit breaker pattern is borrowed from the field of electronics. If you’ve ever had to reset the electric breaker panel switches in your house after an electrical storm or power surge, you are already familiar with the concept. The circuit breaker is meant to protect a system from total failure by stopping the propagation of electricity that can overload the system or cause further damage to other subsystems.

When a remote service is down and thousands of clients are simultaneously trying to use it, eventually all the resources (threads, connections, memory) get consumed. The circuit breaker pattern helps in these scenarios by stopping us from repeatedly calling a service or function that is likely to fail, which avoids wasting CPU cycles on calls that cannot succeed.

What is the Retry pattern?

The Retry pattern in microservices is a design pattern that helps improve the stability and reliability of communication between microservices by automatically retrying failed requests. In microservices architecture, services often need to communicate with each other over the network. However, network requests can fail due to various reasons such as temporary network issues, high loads, or service unavailability.

The Retry pattern addresses these transient failures by allowing the client service to automatically retry the failed request after a short delay. The idea is that the failure might be temporary, and the request could succeed if retried after a brief waiting period. Retrying the request multiple times increases the chances of it succeeding, especially in scenarios where the failure is intermittent or short-lived.
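The idea can be sketched in a few lines of plain Java. This is only an illustration under stated assumptions: `RetrySketch` and `callWithRetry` are hypothetical names, the delay is fixed, and every `RuntimeException` is treated as retryable. It is not how Resilience4j implements retries.

```java
import java.util.function.Supplier;

final class RetrySketch {
    private RetrySketch() {}

    /**
     * Calls the supplier up to maxAttempts times (the first call counts as attempt 1),
     * sleeping waitMillis between attempts. Rethrows the last failure if every attempt fails.
     */
    static <T> T callWithRetry(Supplier<T> call, int maxAttempts, long waitMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.get(); // success: stop retrying
            } catch (RuntimeException e) {
                lastFailure = e;   // remember the failure and maybe try again
                if (attempt < maxAttempts) {
                    sleep(waitMillis);
                }
            }
        }
        throw lastFailure;
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("Interrupted while waiting to retry", ie);
        }
    }
}
```

A call such as `RetrySketch.callWithRetry(() -> client.fetch(), 4, 1000)` (where `client.fetch()` stands in for any remote call) would make at most four attempts, one second apart.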

Working of a Circuit Breaker

The three states in the Circuit Breaker pattern are CLOSED, OPEN and HALF_OPEN, as shown in the diagram below.

The circuit breaker initially operates in the CLOSED state. When a service tries to communicate with another service and the other system fails to respond, the circuit breaker keeps track of these failures. When the number of failures is above a certain threshold, it goes into the OPEN state. While the breaker is OPEN, calls are not forwarded to the failing service; they are rejected immediately. Meanwhile, the circuit breaker may also help the system degrade gracefully through a fallback mechanism that returns a cached or default response to the caller. After a specified wait duration, the circuit breaker goes into the HALF_OPEN state and lets a limited number of trial calls through to the service. If the failure rate of those calls is again above the threshold, it goes back into the OPEN state; otherwise it goes into the CLOSED state and returns to its normal mode of operation.
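The state transitions described above can be sketched as a small plain-Java class. This is a simplified illustration, not the Resilience4j implementation: `SimpleCircuitBreaker` is a hypothetical name, it counts consecutive failures instead of computing a failure rate, it allows a single trial call in HALF_OPEN, and the current time is passed in explicitly so the behaviour is easy to follow.

```java
import java.util.function.Supplier;

final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures that trip the breaker
    private final long openWaitMillis;  // how long to stay OPEN before a trial call
    private State state = State.CLOSED;
    private int failureCount = 0;
    private long openedAtMillis = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openWaitMillis) {
        this.failureThreshold = failureThreshold;
        this.openWaitMillis = openWaitMillis;
    }

    synchronized State getState() {
        return state;
    }

    /** Runs remoteCall if the breaker permits it; otherwise (or on failure) returns the fallback. */
    synchronized <T> T call(Supplier<T> remoteCall, Supplier<T> fallback, long nowMillis) {
        if (state == State.OPEN && nowMillis - openedAtMillis >= openWaitMillis) {
            state = State.HALF_OPEN; // wait duration elapsed: allow one trial call
        }
        if (state == State.OPEN) {
            return fallback.get();   // fail fast without touching the remote service
        }
        try {
            T result = remoteCall.get();
            state = State.CLOSED;    // success closes the breaker and resets the count
            failureCount = 0;
            return result;
        } catch (RuntimeException e) {
            failureCount++;
            if (state == State.HALF_OPEN || failureCount >= failureThreshold) {
                state = State.OPEN;  // trip (or re-trip) and restart the wait
                openedAtMillis = nowMillis;
            }
            return fallback.get();
        }
    }
}
```

With a threshold of 2 and a wait of 1000 ms, two failed calls open the breaker, further calls within the next second return the fallback without reaching the remote service, and the first successful call after the wait closes it again.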

Implementation of the Retry and Circuit Breaker in a Spring application

We can combine the Retry and Circuit Breaker patterns in a way that retries are performed before the Circuit Breaker threshold is reached. This ensures that transient network issues are automatically retried, but if the issues persist, the Circuit Breaker will eventually open to prevent continuous retries.
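A rough plain-Java sketch of this composition, with retry as the outer layer so that every attempt passes through the breaker. `RetryAroundBreaker`, `TinyBreaker` and their methods are hypothetical names used only for illustration, not Resilience4j APIs. Waiting between attempts and the HALF_OPEN state are left out to keep the sketch short.

```java
import java.util.function.Supplier;

final class RetryAroundBreaker {
    private RetryAroundBreaker() {}

    /** Hypothetical minimal breaker: it opens once `threshold` failures have been recorded. */
    static final class TinyBreaker {
        private final int threshold;
        private int failures = 0;

        TinyBreaker(int threshold) {
            this.threshold = threshold;
        }

        boolean isOpen() {
            return failures >= threshold;
        }

        <T> T call(Supplier<T> remoteCall) {
            if (isOpen()) {
                // Fail fast: the remote service is not contacted at all
                throw new IllegalStateException("circuit open, call not permitted");
            }
            try {
                return remoteCall.get();
            } catch (RuntimeException e) {
                failures++; // every failed attempt counts towards the threshold
                throw e;
            }
        }
    }

    /** Retry is the outer layer: each attempt invokes the breaker-guarded call again. */
    static <T> T retry(int maxAttempts, Supplier<T> guardedCall) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return guardedCall.get();
            } catch (RuntimeException e) {
                last = e;
            }
        }
        throw last;
    }
}
```

With a threshold of 2 and four attempts against a service that always fails, the remote call is made only twice; the remaining attempts are rejected by the open breaker without reaching the service.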

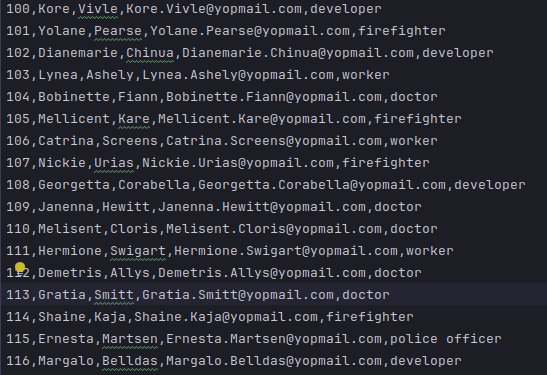

We have created a demo project (https://github.com/nitsbat/circuit-breaker) that contains two services: people-service, the upstream service that provides the data, and the downstream service that consumes it, i.e. microservice. The people-service provides data about all the people who are doctors. The people data looks like this:

To send the above data, we have exposed a very simple API through the people-service controller shown below, so that the microservice can fetch it from this endpoint. Please refer to the GitHub link for the complete code.

```java
@GetMapping("/people")
public ResponseEntity<List<People>> getDoctors() {
    // Locate the CSV file bundled on the classpath
    Resource resource = resourceLoader.getResource("classpath:people.csv");
    // try-with-resources closes the reader even if parsing fails
    try (CSVReader csvReader = new CSVReader(new FileReader(resource.getFile().getPath()))) {
        return ResponseEntity.ok().body(getPeopleFromCSV(csvReader));
    } catch (Exception e) {
        throw new RuntimeException(e);
    }
}
```

We have designed our main service, i.e. microservice, using a Feign client, as it simplifies defining and invoking HTTP requests to external services by providing a more intuitive, Java-friendly interface. We created a Feign interface that is responsible for fetching the above data from people-service.

```java
@FeignClient(name = "people-api", url = "http://localhost:8080/people")
public interface DataProxy {

    Logger log = LoggerFactory.getLogger(DataProxy.class);

    @GetMapping
    @Retry(name = "peopleProxyRetry")
    @CircuitBreaker(name = "peopleProxyCircuitBreaker", fallbackMethod = "serviceFallbackMethod")
    ResponseEntity<List<People>> getCurrentUsers();

    // Logs the failure and rethrows, so the surrounding retry still sees an exception
    default ResponseEntity<List<People>> serviceFallbackMethod(Throwable exception) {
        log.error(
            "Data server is either unavailable or malfunctioned due to {}", exception.getMessage());
        throw new RuntimeException(exception.getMessage());
    }
}
```

We have provided all the configuration of the retry and the circuit breaker through application.properties. We have also given the Circuit Breaker a fallback method. It is invoked whenever the protected call fails, including when the circuit is in the open state and is blocking requests to the failing service. The purpose of serviceFallbackMethod is to provide graceful degradation when requests cannot be fulfilled because the service is unavailable or failing; in this demo it simply logs the error and rethrows it. Next we will look at the properties of both the retry and the circuit breaker. Feel free to skip this part if you already know the properties used for Retry and Circuit Breaker.

Properties of RETRY

```properties
resilience4j.retry.configs.default.maxAttempts=4
resilience4j.retry.configs.default.waitDuration=1000
resilience4j.retry.configs.default.enableExponentialBackoff=true
resilience4j.retry.configs.default.exponentialBackoffMultiplier=2
resilience4j.retry.instances.peopleProxyRetry.baseConfig=default
```

- maxAttempts : The maximum number of attempts for an operation, counting the initial call as the first attempt. Once this limit is reached, the operation is considered failed.

- waitDuration : The delay between attempts, which can be fixed or follow an exponential backoff strategy. Exponential backoff increases the delay with each subsequent retry to avoid overloading the service.

- enableExponentialBackoff : Enables the exponential backoff strategy instead of a fixed delay.

- exponentialBackoffMultiplier : The multiplier used to calculate the waiting time. The formula is

wait_interval = base * multiplier ^ (retry_count - 1)

In our scenario the waiting times are:

For the first retry :

waiting time = 1000 * 2^0 = 1000 ms

For the second retry :

waiting time = 1000 * 2^1 = 2000 ms

For the third retry :

waiting time = 1000 * 2^2 = 4000 ms
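The same arithmetic can be written as a small helper. This is a sketch of the formula above only, not of Resilience4j's interval function; `BackoffSchedule` and `waitIntervalsMillis` are hypothetical names.

```java
final class BackoffSchedule {
    private BackoffSchedule() {}

    /**
     * Returns the wait before each retry, using base * multiplier ^ (retry_count - 1).
     * maxAttempts counts the initial call, so there are maxAttempts - 1 retries.
     */
    static long[] waitIntervalsMillis(long baseMillis, double multiplier, int maxAttempts) {
        int retries = Math.max(0, maxAttempts - 1);
        long[] waits = new long[retries];
        for (int retry = 1; retry <= retries; retry++) {
            waits[retry - 1] = Math.round(baseMillis * Math.pow(multiplier, retry - 1));
        }
        return waits;
    }
}
```

Calling `BackoffSchedule.waitIntervalsMillis(1000, 2.0, 4)` yields 1000, 2000 and 4000 ms, matching the three retries worked out above.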

Because maxAttempts is 4 and includes the initial call, four calls have been made once the third retry fails, so retrying stops. With four failures recorded, the circuit breaker takes over.

Properties of Circuit Breaker

resilience4j.circuitbreaker.configs.default.registerHealthIndicator=true

resilience4j.circuitbreaker.configs.default.slidingWindowType=COUNT_BASED

resilience4j.circuitbreaker.configs.default.slidingWindowSize=6

resilience4j.circuitbreaker.configs.default.minimumNumberOfCalls=4

resilience4j.circuitbreaker.configs.default.failureRateThreshold=50

resilience4j.circuitbreaker.configs.default.permittedNumberOfCallsInHalfOpenState=3

resilience4j.circuitbreaker.configs.default.waitDurationInOpenState=PT30S

resilience4j.circuitbreaker.configs.default.automaticTransitionFromOpenToHalfOpenEnabled=true

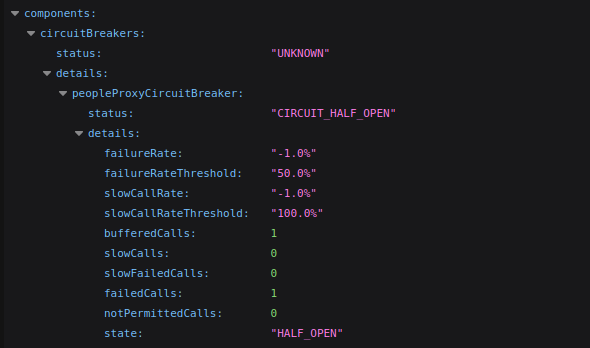

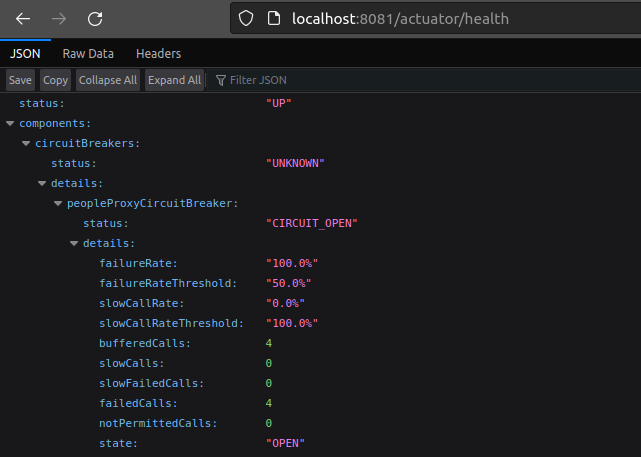

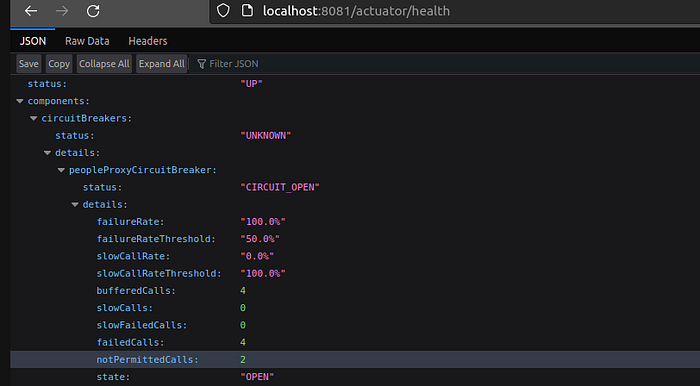

resilience4j.circuitbreaker.instances.peopleProxyCircuitBreaker.baseConfig=default- registerHealthIndicator : This is very important property if you want to observe your circuit breaker and debug whether the properties are working perfectly. It register a health indicator with your application’s health endpoint. It gives the metrics as shown below :

2. slidingWindowType : This property selects the strategy, count-based or time-based, used to collect call outcomes and decide whether to open or close the Circuit Breaker.

3. slidingWindowSize : For a count-based sliding window, it is the number of most recent calls over which outcomes are collected and the failure rate is calculated. For a time-based sliding window, it is the duration (in seconds) over which outcomes are collected.

4. failureRateThreshold : This is the most important property. It is the percentage of failed calls within the sliding window at which the Circuit Breaker acts: when the failure rate is equal to or greater than this threshold, the Circuit Breaker opens. The same threshold is applied to the trial calls in the half-open state to decide whether to close or reopen.

5. minimumNumberOfCalls : It specifies the minimum number of calls, successful or failed, that must be recorded before the failure rate is calculated at all. For example, if you set this value to 5, the circuit breaker cannot open until at least 5 calls have been recorded, even if every call so far has failed. This parameter prevents the circuit breaker from opening because of a handful of isolated or transient failures.

6. waitDurationInOpenState : It specifies the amount of time the circuit breaker remains in the open state before transitioning to the half-open state to test whether the service has recovered.

7. permittedNumberOfCallsInHalfOpenState : It is the number of trial requests that are allowed through the Circuit Breaker while it is in the half-open state. These calls are sent to the previously failing service to check whether it has recovered.

8. automaticTransitionFromOpenToHalfOpenEnabled : It determines whether the circuit breaker automatically transitions from the open state to the half-open state once the wait duration has passed, without waiting for a new call to trigger the transition. We have set it to true for the automatic transition to the half-open state.
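To make the interplay of slidingWindowSize, minimumNumberOfCalls and failureRateThreshold concrete, here is a minimal plain-Java sketch of a count-based window. It is an illustration under simplifying assumptions, not the Resilience4j implementation: `CountBasedWindow` and its methods are hypothetical names, and slow calls and state handling are ignored.

```java
final class CountBasedWindow {
    private final boolean[] failed;           // ring buffer holding the last N outcomes
    private final int minimumNumberOfCalls;
    private final float failureRateThreshold; // in percent
    private int recorded = 0;                 // outcomes currently stored, at most N
    private int next = 0;                     // slot that the next outcome overwrites
    private int failures = 0;                 // failed outcomes currently in the window

    CountBasedWindow(int slidingWindowSize, int minimumNumberOfCalls, float failureRateThreshold) {
        this.failed = new boolean[slidingWindowSize];
        this.minimumNumberOfCalls = minimumNumberOfCalls;
        this.failureRateThreshold = failureRateThreshold;
    }

    void record(boolean callFailed) {
        if (recorded == failed.length) {
            if (failed[next]) {
                failures--; // the oldest outcome drops out of the window
            }
        } else {
            recorded++;
        }
        failed[next] = callFailed;
        if (callFailed) {
            failures++;
        }
        next = (next + 1) % failed.length;
    }

    /** Failure rate in percent, or -1 while fewer than minimumNumberOfCalls are recorded. */
    float failureRate() {
        if (recorded < minimumNumberOfCalls) {
            return -1f;
        }
        return 100f * failures / recorded;
    }

    /** True once the failure rate is known and has reached the threshold. */
    boolean shouldOpen() {
        float rate = failureRate();
        return rate >= 0f && rate >= failureRateThreshold;
    }
}
```

With the values used in this article (window size 6, minimum 4 calls, threshold 50), three failed calls are not enough to open the breaker because the rate is not yet calculated; the fourth failed call makes the rate 100% and the breaker should open.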

RETRY and Circuit Breaker implementation in action

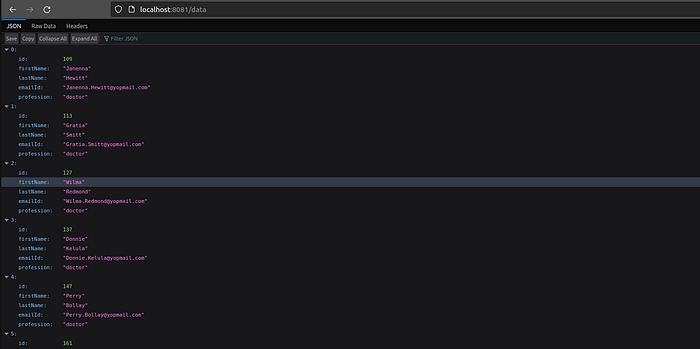

Before trying different scenarios, let's check whether our main service is able to fetch all the doctor records from the people-service. Just hit http://localhost:8081/data on the main service and you will get records like the ones below:

Awesome, it works. Let's now dive into different scenarios and verify the results.

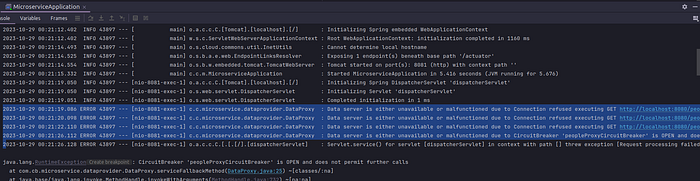

Case I : Let's stop our people-service and observe how the main application, i.e. microservice, behaves. After stopping the people-service, you will find that the main service retries 3 times, waiting between attempts. You can verify this either from the logs or by putting a breakpoint in the service fallback method.

We can clearly see that the fallback method has been called four times, as the exception is logged four times. Also note the timestamp differences between these exceptions in the logs. They match the waiting times we calculated above in the properties of Retry section. Can you guess what else has happened in this case?

Yes, our circuit breaker has also gone into the open state. The failure threshold was 50%, and since all four calls failed, our failure rate is 100%, as shown below :

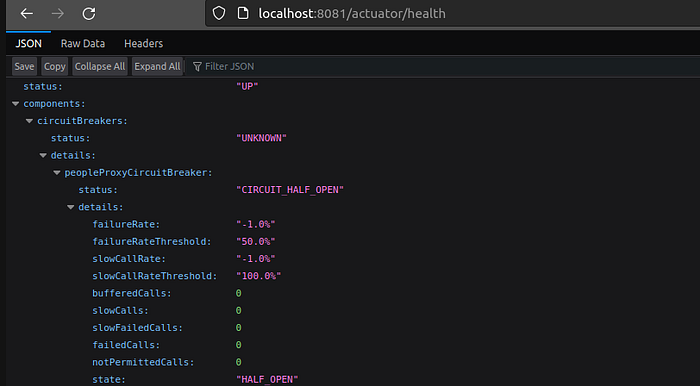

Note : Whether or not more calls arrive, after 30 seconds the circuit will move to the HALF_OPEN state by itself, because we have set automaticTransitionFromOpenToHalfOpenEnabled to true.

Case II : Let's take another scenario based on the same example: what would the state be after only 3 failed calls? Would the circuit be in the Closed or the Open state?

If your answer is the Closed state, you are correct, because we have set the minimum number of calls to 4.

Case III : Consider the same scenario as above, with the people-service still down or throwing exceptions. Guess what will happen to the 5th and 6th calls from the main service. Yes, they will be rejected by the open circuit without reaching the people-service, as shown below.

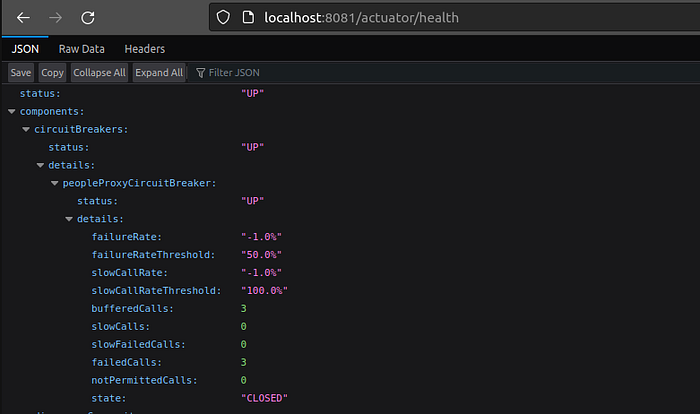

Case IV : Let's take a simple scenario in which, after 30 seconds, the people-service is working again and the main service makes successful calls. Once the permitted trial calls in the half-open state (3 in our configuration) succeed, the circuit returns to the closed state and resets all the metrics. If the trial calls fail instead and the failure rate crosses the threshold, the circuit goes back to the Open state and remains there for the next 30 seconds.

Conclusion

I hope this helps you implement the circuit breaker in your microservices. There are several scenarios in which we don't want our circuit to trip. Feel free to explore how we can ignore certain client-side and server-side errors and exceptions to prevent unnecessary tripping of our circuit breaker in my next article : https://nitsbat.medium.com/preventing-our-circuit-breaker-retry-from-tripping-due-to-certain-exceptions-52a1b7d8a0ca. Also, don't forget to read up on the best practices for implementing Circuit Breaker with Spring Cloud.